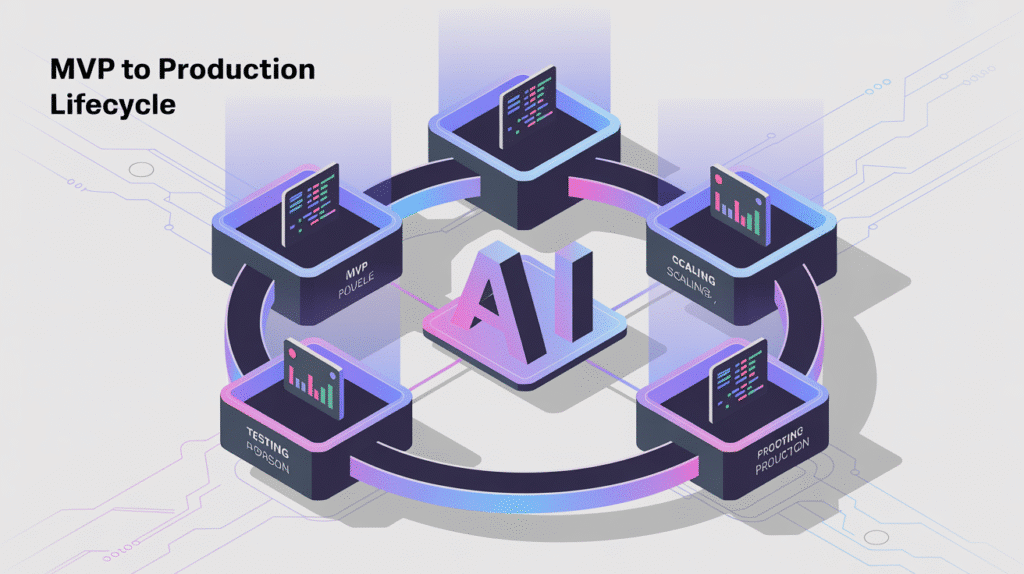

The path from a promising AI MVP (Minimum Viable Product) to a robust, production-ready AI system can seem overwhelming. Many organizations celebrate early wins with successful proofs of concept, only to face new hurdles during full-scale deployment. This transition, often called the AI gap, carries both opportunity and risk. Crossing it takes more than technical skill: it calls for solid frameworks, strategic planning, and a clear focus on measurable results.

Why does the gap between MVP and production matter?

Organizations invest heavily in AI MVP development, using pilots or prototypes to validate ideas. While these initial steps generate excitement, moving beyond MVP often reveals unexpected complexities. Scaling a solution originally built for small datasets into something resilient and enterprise-grade introduces challenges that are frequently underestimated.

At scale, problems with data pipelines, data management, infrastructure, and process consistency become apparent. If teams do not close this gap in knowledge and capability, even the most innovative prototypes may never reach their intended impact. Stakeholders need a clear understanding of what separates a functional prototype from a fully operational AI product.

Identifying and managing risks when scaling from MVP to production

Transitioning from MVP to production uncovers hidden risks, both technical and organizational. Managing them effectively starts with understanding where they come from and what they imply, especially as the ambitions of an enterprise AI strategy grow.

Risk mitigation is essential to maintain performance, security, and user experience as deployments scale. Anticipating challenges enables teams to act quickly and avoid costly setbacks throughout the journey.

What are common technical risks?

Scaling from MVP to production brings technical risks such as unstable codebases, weak data pipelines, and insufficient automation for integration. As data volumes increase, quality can suffer, undermining model accuracy and reliability.

Performance optimization is another challenge. Models tuned in controlled environments may struggle under real-world load, which threatens business continuity. Teams also need to remove infrastructure bottlenecks and put effective monitoring in place before traffic grows.

How do operational and adoption challenges affect success?

Adoption and ROI are closely linked: a system that people avoid cannot deliver a return on its investment. Overlooking human-centered AI design can create resistance among users. Trust, transparency, and seamless integration all play crucial roles in encouraging widespread adoption.

Change management cannot be ignored. Training, security policies, and cross-department alignment must keep pace with the technology. Without strong operational strategies, even advanced solutions risk low usage and weak results.

Frameworks for engineering and delivery: building for full production

Long-term, scalable AI deployment demands structure. Proven engineering and delivery frameworks close the AI gap by providing repeatable steps and best practices, supporting reliable outcomes at every phase. Building for production involves establishing robust foundations from day one.

A clear roadmap helps guide AI projects through complexity, fostering collaboration between developers, domain experts, and stakeholders. This approach turns prototypes into valuable assets rather than isolated experiments. To scale efficiently, some businesses engage external partners, such as the AI solutions development services from 8allocate, to help chart a reliable path from prototype to production.

Which tools can streamline data pipelines and management?

Data is the backbone of any AI solution. Efficient, scalable data pipelines automate the collection, cleaning, verification, and movement of large datasets. Automating these steps reduces manual errors and supports compliance, both critical requirements for production environments.
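The following minimal Python sketch shows what the cleaning and verification steps can look like in one pipeline stage. The record fields (`customer_id`, `amount`), the `clean_and_validate` function, and the `ValidationReport` type are hypothetical. A production pipeline would typically run a step like this inside an orchestration or data-quality framework rather than as a standalone function.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ValidationReport:
    accepted: list[dict[str, Any]] = field(default_factory=list)
    # Each rejected entry keeps the original record plus the fields that failed.
    rejected: list[tuple[dict[str, Any], list[str]]] = field(default_factory=list)


def clean_and_validate(records, required_fields):
    """Hypothetical pipeline stage: trim strings, then reject incomplete records."""
    report = ValidationReport()
    for record in records:
        # Cleaning: strip stray whitespace from every string value.
        cleaned = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
        # Verification: every required field must be present and non-empty.
        missing = [f for f in required_fields if cleaned.get(f) in (None, "")]
        if missing:
            report.rejected.append((record, missing))
        else:
            report.accepted.append(cleaned)
    return report


raw = [
    {"customer_id": " c-001 ", "amount": 42.0},
    {"customer_id": "", "amount": 10.0},
    {"customer_id": "c-003", "amount": None},
]
report = clean_and_validate(raw, required_fields=["customer_id", "amount"])
print(len(report.accepted), len(report.rejected))
```

The sketch keeps each rejected row together with the reason it failed instead of silently dropping it. That record serves as an audit trail, which helps with the compliance requirements mentioned above.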

Established frameworks, such as Apache Airflow for workflow orchestration or Apache Spark for distributed batch processing, coordinate storage, transformations, and batch jobs in a unified system. Effective orchestration keeps data accurate and up to date, so models can be retrained on fresh data without disrupting the pipeline.
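At its core, orchestration means running tasks in dependency order. The sketch below uses the standard-library `graphlib.TopologicalSorter` (Python 3.9+) to compute a valid execution order for a small pipeline. The task names and the `run_task` placeholder are illustrative assumptions. Dedicated orchestrators add scheduling, retries, and parallelism on top of this basic idea.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "ingest": set(),
    "clean": {"ingest"},
    "validate": {"clean"},
    "build_features": {"validate"},
    "refresh_training_set": {"build_features"},
    "refresh_dashboard": {"validate"},
}


def execution_order(dag):
    """Return an order in which every task runs after all of its dependencies."""
    try:
        return list(TopologicalSorter(dag).static_order())
    except CycleError as err:
        # args[1] lists the nodes forming the cycle; a cycle means the DAG is misconfigured.
        raise ValueError(f"pipeline has a dependency cycle: {err.args[1]}") from err


def run_task(name):
    # Placeholder for real work, e.g. a batch job or a SQL transformation.
    print(f"running {name}")


order = execution_order(pipeline)
for task in order:
    run_task(task)
```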

How does performance optimization drive reliability?

Once AI projects move out of the test lab, continuous performance optimization becomes non-negotiable. Automated monitoring of latency, throughput, and resource usage allows teams to address issues before they escalate.
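Latency and throughput monitoring can start as simply as summarizing each time window and comparing it with a budget. In this sketch, the 95th-percentile latency budget, the window length, and the `window_summary` function are assumptions for illustration. In practice these numbers usually come from a metrics system rather than an in-memory list.

```python
import statistics


def window_summary(latencies_ms, window_seconds, p95_budget_ms):
    """Summarize one monitoring window and flag it if p95 latency exceeds the budget."""
    if len(latencies_ms) < 2:
        raise ValueError("need at least two samples to estimate a percentile")
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    p95 = cuts[94]
    return {
        "p95_ms": p95,
        "throughput_rps": len(latencies_ms) / window_seconds,
        "alert": p95 > p95_budget_ms,
    }


# Hypothetical window: 100 requests observed over 10 seconds.
latencies = [float(ms) for ms in range(1, 101)]
summary = window_summary(latencies, window_seconds=10, p95_budget_ms=90)
print(summary)
```

Alerting on a tail percentile rather than the average is a common choice. A small share of slow requests can hurt users while barely moving the mean.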

Improvement cycles extend beyond software tweaks to include hardware upgrades, hyperparameter tuning, and disaster recovery planning. This ongoing process keeps performance aligned with business needs, maintaining a healthy feedback loop throughout the project lifecycle.

The process of proof of concept (POC) and validation for AI solutions

The proof of concept (POC) stage demonstrates whether an idea works using minimal resources in a controlled setting. Validation extends this by testing effectiveness with real-world data and broader measures, preparing the project for tougher production requirements.

Progressing past POC means more than having working code. Teams must document processes, allocate scalable resources, and benchmark against clear KPIs. A methodical approach, where each milestone builds toward readiness, greatly increases the chances of successful deployment.

- Demonstrate key outcomes under sample workloads

- Align prototype capabilities with target business goals

- Document dependencies and potential integration roadblocks

- Develop a pathway from functional POC to validated alpha release
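Benchmarking against clear KPIs can become an explicit go/no-go check before a POC moves toward an alpha release. The metric names and thresholds below are hypothetical examples, not recommended targets. Each target records whether the metric must stay above (`min`) or below (`max`) its threshold.

```python
def kpi_gate(measured, targets):
    """Hypothetical go/no-go check; each target is (threshold, "min" or "max")."""
    failures = {}
    for name, (threshold, direction) in targets.items():
        if direction not in ("min", "max"):
            raise ValueError(f"unknown direction for {name}: {direction}")
        value = measured.get(name)
        if value is None:
            failures[name] = "not measured"
        elif direction == "min" and value < threshold:
            failures[name] = f"{value} is below the minimum of {threshold}"
        elif direction == "max" and value > threshold:
            failures[name] = f"{value} exceeds the maximum of {threshold}"
    return not failures, failures


targets = {
    "accuracy": (0.90, "min"),
    "p95_latency_ms": (300, "max"),
    "cost_per_1k_requests_usd": (0.50, "max"),
}
measured = {"accuracy": 0.93, "p95_latency_ms": 410}
passed, failures = kpi_gate(measured, targets)
print(passed, failures)
```

The gate treats an unmeasured KPI as a failure rather than skipping it. This keeps gaps in the benchmark visible.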

Deployment best practices for confident scaling

Deployment involves more than simply launching scripts on servers. Careful orchestration and constant oversight contribute to stability and user trust at every stage. Following established deployment best practices reduces surprises and supports smooth scaling.

Continuous delivery strengthens resilience, enabling incremental rollouts and rapid rollback if needed. Proactive monitoring and anomaly detection keep systems healthy and provide critical feedback throughout the transition process.
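Incremental rollout with rapid rollback has two parts: a deterministic way to assign users to the new version, and a rule for advancing or reverting the rollout. The rollout steps, the error-rate tolerance, and both function names below are assumptions for illustration. A real deployment would usually delegate traffic splitting to a load balancer, service mesh, or feature-flag system.

```python
import hashlib


def in_rollout(user_id: str, percent: int) -> bool:
    """Deterministically place a user in the new version for `percent`% of users."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return bucket < percent


def next_stage(current_percent, canary_error_rate, baseline_error_rate,
               tolerance=0.01, steps=(1, 5, 25, 50, 100)):
    """Advance to the next rollout step, or roll back to 0% if the canary underperforms."""
    if canary_error_rate > baseline_error_rate + tolerance:
        return 0  # rollback: route all traffic back to the stable version
    later = [s for s in steps if s > current_percent]
    return later[0] if later else current_percent


print(next_stage(5, canary_error_rate=0.02, baseline_error_rate=0.02))
print(next_stage(5, canary_error_rate=0.05, baseline_error_rate=0.02))
```

Hashing the user ID, rather than choosing users at random per request, keeps each user on the same version throughout a stage. This makes feedback and error rates easier to attribute.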

| Best practice | Description |
|---|---|
| Automated testing | Verifies functionality and reliability before every release, catching regressions early. |
| Shadow deployments | Sends live traffic to the new version alongside the current system without serving its output, so results can be compared with no exposure to users. |
| Gradual feature rollout | Releases changes to production in phases to control risk and gather live feedback incrementally. |
| Robust monitoring | Provides end-to-end visibility for quick detection of bottlenecks and performance drops. |
| Clear rollback procedures | Speeds recovery when unexpected failures threaten operations, minimizing downtime. |
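A small wrapper can illustrate a shadow deployment. Users always receive the current system's answer, while the candidate runs on the same input and any disagreement is recorded for later review. The `legacy_model` and `candidate_model` functions are hypothetical stand-ins. The shadow call runs synchronously here for readability; in production it would typically run asynchronously so it adds no user-facing latency.

```python
from typing import Any, Callable


def shadow_call(request: Any, legacy: Callable[[Any], Any],
                candidate: Callable[[Any], Any], mismatches: list) -> Any:
    """Serve the legacy result; run the candidate in the shadow and log disagreements."""
    served = legacy(request)
    try:
        shadow = candidate(request)
        if shadow != served:
            mismatches.append((request, served, shadow))
    except Exception as exc:  # a failing candidate must never affect the user
        mismatches.append((request, served, f"error: {exc}"))
    return served


def legacy_model(score: float) -> bool:
    # Hypothetical current decision rule.
    return score > 0.5


def candidate_model(score: float) -> bool:
    # Hypothetical candidate with a stricter threshold.
    return score > 0.6


mismatches: list = []
served = [shadow_call(s, legacy_model, candidate_model, mismatches) for s in [0.2, 0.55, 0.9]]
print(served, mismatches)
```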

Human-centered AI design for higher adoption

User experience stands at the core of every production-ready AI system. True human-centered AI design considers communication, transparency, and graceful handling of exceptions—not just interface elements. Adapting experiences for diverse users reinforces trust and boosts engagement across all teams.

Gathering feedback during pilot phases reveals blind spots and highlights practical pain points. Adjusting interfaces based on this input shows a genuine commitment to usability. Insights gained collectively shape future releases, making adoption challenges manageable instead of overwhelming.

Establishing enterprise AI strategy for long-term growth

Pilots deliver short-term gains, but lasting value comes from a well-defined enterprise AI strategy. Integrating business context, technology advances, and ethical considerations provides a foundation for sustained investment in intelligent solutions.

This perspective drives cross-functional alignment, spreading responsibility across product, legal, and compliance teams. Organizations reaching production maturity understand that true AI benefits emerge only when everyone contributes to governance, innovation, and operational discipline.

What role does ROI assessment play in strategy?

ROI evaluation determines which initiatives take priority. Measuring everything from efficiency improvements to customer satisfaction aligns technical decisions with top-level business objectives. Realistic projections keep teams focused as deployments evolve.

An effective strategy weaves cost-benefit analysis into each checkpoint, reducing waste and maintaining momentum. Consistently delivering measurable value cements AI’s reputation within the organization.
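At each checkpoint, the cost-benefit analysis often reduces to two numbers: return on investment, `ROI = (benefit - cost) / cost`, and the payback period. The figures below are invented purely to show the arithmetic. They are not benchmarks for real AI projects.

```python
def roi(total_benefit, total_cost):
    """Return on investment: net gain divided by total cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


def payback_months(upfront_cost, monthly_net_benefit):
    """Months until cumulative net monthly benefit covers the upfront cost."""
    if monthly_net_benefit <= 0:
        return float("inf")  # the project never pays back at this rate
    return upfront_cost / monthly_net_benefit


# Hypothetical project: 120,000 upfront, 5,000/month to run, 20,000/month in benefits.
upfront, monthly_cost, monthly_benefit, months = 120_000, 5_000, 20_000, 12
first_year_roi = roi(monthly_benefit * months, upfront + monthly_cost * months)
payback = payback_months(upfront, monthly_benefit - monthly_cost)
print(f"12-month ROI: {first_year_roi:.1%}, payback: {payback:.1f} months")
```

With these assumed numbers, the project returns about 33% in its first year and covers its upfront cost after 8 months.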

How does leadership shape AI adoption?

Leadership sets the tone for a data-driven culture. Active involvement from leaders accelerates change, breaks down silos, and creates opportunities for knowledge sharing. Comprehensive onboarding helps staff engage confidently with emerging technologies.

Visionary leadership guides teams through obstacles, embedding AI capabilities into daily routines. With strategic intent, experimentation gives way to coordinated progress as organizations master scaling from MVP to production.